## Introduction: The Alarming Rise of “Undress AI” Technology

In our hyper-connected digital world, groundbreaking innovations often arrive with hidden dangers that erode privacy and consent. One of the most disturbing is “undress AI,” a form of deepfake technology that generates non-consensual intimate imagery (NCII) by digitally stripping the clothing from photos of real people. Built on modern generative AI, these tools produce realistic images that show people nude or in explicit scenarios they never agreed to. Their use has grown rapidly, including in the United States and Europe, where anyone can reach such apps with a few clicks. What was once a fringe concern is now an everyday risk, turning online safety into a frontline fight for personal dignity.

## What is “Undress AI” and How Does it Work?

At its heart, “undress AI” relies on machine learning techniques such as Generative Adversarial Networks (GANs) and diffusion models to manipulate photos. These systems are trained on massive image collections to learn and reproduce human features, then apply that knowledge to edit an uploaded picture, for example by removing clothing or adding fabricated nudity with startling precision. The result is a new image that can look almost identical to a real photo and may fool most viewers who do not inspect it closely.

While similar technology powers legitimate creative fields like digital art and film effects, using it to create unauthorized deepfakes crosses serious ethical lines and violates people’s autonomy. Because these tools are often marketed as simple photo enhancers or fun AI generators, they lower the barrier to misuse, even among casual users who may not realize the legal and moral weight of what they’re doing. Apps promising “virtual try-ons,” for instance, can quickly veer into territory that is anything but innocent.

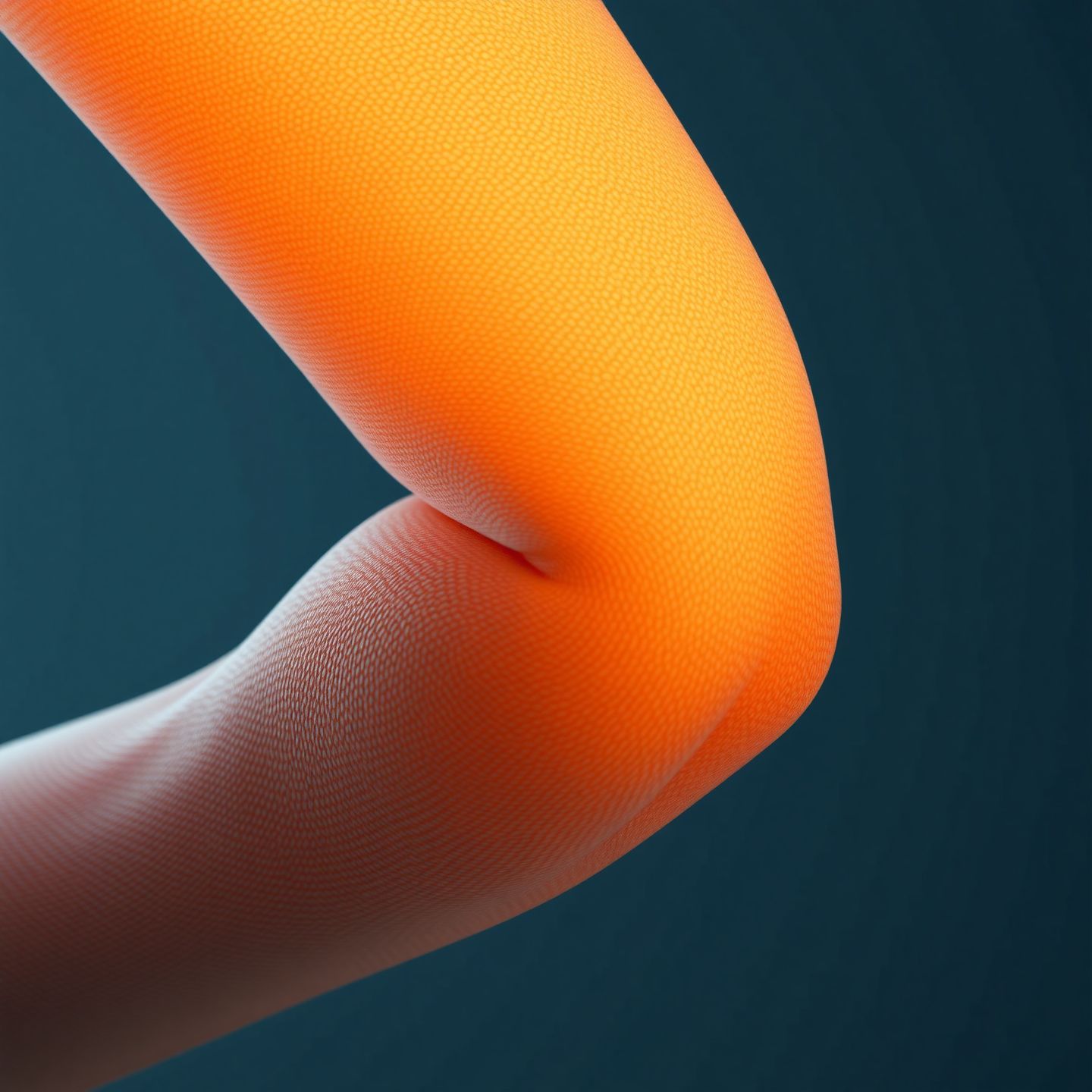

## The Devastating Impact: Harms and Consequences for Victims

The fallout from “undress AI” images runs deep, damaging victims’ emotional well-being, reputations, and social lives in ways that can linger for years. Because these images are created without consent, they amount to a severe invasion of privacy that can upend a person’s life. Survivors often grapple with intense anxiety, depression, PTSD, and, in extreme cases, thoughts of self-harm. The violation feels intensely personal, a theft of one’s likeness turned into something degrading, and it leaves behind feelings of powerlessness, humiliation, and lasting distrust.

Beyond the inner turmoil, the damage spills into the real world. These fake images can spread quickly across social networks, group chats, and obscure corners of the web, where employers, family members, or colleagues may see them. A job opportunity derailed by a circulating deepfake, or a family strained by online gossip, can shut people out of opportunities or relationships for good. Socially, victims may withdraw from friends and communities, left isolated and with a battered self-image. Organizations such as UNICEF have highlighted how this kind of digital abuse mirrors other forms of abuse, with the added harm that the images rarely vanish completely and can resurface in searches or shares, reopening the wound each time.

### The Psychology of Perpetrators: Understanding Motivations

To tackle this problem head-on, it’s worth unpacking why people turn to “undress AI,” even though nothing justifies the harm they cause. Many offenders seek a sense of power or control, using these tools for revenge after a breakup or to bully specific targets. Women bear the brunt of this abuse, with underlying sexism fueling efforts to objectify or shame them, a pattern seen in high-profile cases of online harassment.

The anonymity of the internet also plays a big role, feeding what psychologists call the online disinhibition effect: behind a screen, actions feel detached from their human cost, like tossing a stone into a void. Some perpetrators lack empathy and dismiss the trauma because the images are “fake” or “just a joke,” while others seek approval in toxic online communities. Anonymous forums where users trade deepfakes for laughs show how group dynamics can normalize abuse and blind creators to the real suffering on the other end.

## The Legal Landscape: Laws, Lawsuits, and Digital Consent

Laws addressing “undress AI” and NCII are catching up quickly as lawmakers in the US, UK, and EU push for stronger protections. In the United States, rules differ from state to state: most states have laws against non-consensual intimate imagery (often called revenge porn laws), and the 2022 reauthorization of the Violence Against Women Act (VAWA) gives victims a federal civil claim against people who share their intimate images without consent. In the UK, the Online Safety Act targets harmful online material, including deepfakes, by holding platforms accountable. The European Union’s AI Act adds transparency rules for AI systems, including a requirement that deepfakes be disclosed as artificially generated.

High-profile lawsuits, including Meta’s legal action against developers of undress apps, signal a tougher stance on accountability. These cases also expose enforcement hurdles, especially when the perpetrator is in one country and the victim in another. At the core lies digital consent: creating or sharing intimate images of someone without their clear, voluntary agreement is wrong and, in a growing number of jurisdictions, illegal, giving survivors solid ground to demand justice.

### Platform Accountability: The Role of Tech Giants

Tech giants such as Google, Apple, and Meta can either enable or curb the spread of “undress AI.” They host apps in their stores and run vast social feeds where this content circulates, so their moderation policies matter enormously. Most have rules banning NCII and deepfakes, enforced with automated detection systems and teams of human reviewers who flag and remove violations.

Still, the sheer volume of uploads and the rapid improvement of AI-generated imagery make enforcement an uphill battle. Debate continues over how much responsibility platforms should bear: in the US, Section 230 largely shields them from liability for content their users post, but many argue they must do more to prevent harm. Initiatives such as faster takedowns and partnerships with law enforcement show progress, yet the arms race with bad actors persists, calling for better technology and a genuine focus on protecting users.

## Protecting Yourself and Others: Prevention and Actionable Steps

Beating back “undress AI” threats calls for smart strategies: stay informed, stay alert, and act decisively when needed. Building these habits can help protect you and those around you.

### A Guide for Parents and Carers

As guardians, parents and caregivers are the first line of defense against online dangers for kids and teens. Start with straightforward conversations about online risks: explain that once something is posted, it can be impossible to take back, and why sharing photos can backfire. Introduce digital consent early, and teach children to recognize inappropriate requests and report them right away. Encourage strict privacy settings on apps and devices, and make online safety a family routine. Organizations such as the National Center for Missing & Exploited Children (NCMEC) offer practical guidance on recognizing and stopping AI-generated abuse material. Check in regularly without hovering, so kids feel safe talking about their digital lives.

### How to Identify AI-Generated Deepfakes

Spotting deepfakes isn’t always easy, but key tells can tip you off to manipulation:

* **Subtle Inconsistencies:** Watch for awkward seams, odd skin tones, or lighting that doesn’t line up.

* **Unnatural Features:** Eyes might look glassy, teeth too uniform, or hair unnaturally stiff—AI often slips on fine details.

* **Background Distortions:** Edges could blur or warp, clashing with the main subject.

* **Metadata Analysis:** If available, check the file’s metadata for traces of editing software. Keep in mind that metadata is easily stripped or altered, so its absence proves nothing.

* **AI Detection Tools:** Dedicated deepfake detectors are improving, though none is fully reliable; for example, an ethical platform like Swipey AI might offer advanced tracking to verify image authenticity, raising the bar against fakes.
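
The metadata check can be sketched in a few lines of Python using only the standard library. This is a minimal, hypothetical sketch under narrow assumptions: it reads PNG files only, looks only at uncompressed `tEXt` chunks, and treats a `Software` keyword as a hint of editing, which is a common convention but not a guarantee. The function names `png_text_metadata` and `editing_hint` are illustrative, not part of any existing tool. It does not read JPEG EXIF data, and because metadata is easy to strip or forge, a `None` result says nothing about whether an image is authentic.

```python
import struct
from typing import Dict, Optional

# Every PNG file begins with this fixed 8-byte signature.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_text_metadata(data: bytes) -> Dict[str, str]:
    """Collect keyword/text pairs from a PNG's uncompressed tEXt chunks.

    Hypothetical helper: it skips compressed (zTXt) and international (iTXt)
    text chunks, does not verify chunk CRCs, and does not read EXIF data.
    """
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    entries: Dict[str, str] = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Chunk layout: 4-byte big-endian length, 4-byte type, data, 4-byte CRC.
        (length,) = struct.unpack(">I", data[pos:pos + 4])
        chunk_type = data[pos + 4:pos + 8]
        body = data[pos + 8:pos + 8 + length]
        if chunk_type == b"tEXt" and b"\x00" in body:
            # tEXt data is a keyword, a NUL separator, then Latin-1 text.
            keyword, _, text = body.partition(b"\x00")
            entries[keyword.decode("latin-1")] = text.decode("latin-1")
        elif chunk_type == b"IEND":
            break  # IEND marks the end of the image.
        pos += 12 + length  # skip length, type, data, and CRC
    return entries


def editing_hint(data: bytes) -> Optional[str]:
    """Return the 'Software' text entry, if any.

    A value suggests which program last wrote the file; None is not
    evidence that the image is authentic, since metadata is easily stripped.
    """
    return png_text_metadata(data).get("Software")
```

Reading EXIF fields from JPEG files usually calls for an imaging library such as Pillow; the PNG-only scope here simply keeps the sketch dependency-free.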

### What to Do If You or Someone You Know is a Victim

Facing “undress AI” head-on demands quick, calm steps to regain control:

1. **Do Not Delete**: Save everything—screenshots, links, timestamps—as proof for reports or court.

2. **Report**:

   * **To Platforms**: Flag the content on the site or app (such as Reddit, X, Instagram, or Discord) using its NCII reporting tools.

   * **To Law Enforcement**: Contact your local police; in the US, you can also report to the FBI, and in the EU and UK, to police cybercrime units.

   * **To Specialized Organizations**: Turn to the Cyber Civil Rights Initiative (CCRI) in the US or to UK helplines for expert help.

3. **Legal Recourse**: Talk to a digital rights attorney about injunctions, lawsuits, or court orders to take content down.

4. **Seek Support**: Don’t go it alone; therapists or support networks can help you process the emotional toll.

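5. **Online Image Removal**: Use content-removal services, search engines’ removal-request forms, or DMCA takedown notices (which generally apply when you own the copyright in the original photo) to get images removed from search results and websites.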
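
The “Do Not Delete” step can be backed by a simple evidence log. This minimal sketch assumes you have already saved screenshots or downloaded copies as files; it records each file’s SHA-256 digest, the URL where it was found, and a UTC timestamp as one line of JSON. The helper names `sha256_of_file` and `log_evidence` and the record fields are hypothetical choices for illustration, not a legal or forensic standard, so check with your lawyer or the police about the format they need.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def sha256_of_file(path: Path) -> str:
    """Hash the file in chunks so large videos don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for block in iter(lambda: fh.read(65536), b""):
            digest.update(block)
    return digest.hexdigest()


def log_evidence(evidence_file: Path, source_url: str, log_path: Path, note: str = "") -> dict:
    """Append one record about a saved screenshot or download to a JSON Lines log.

    Hypothetical helper: field names are illustrative, not a legal standard.
    """
    record = {
        "file": evidence_file.name,
        "sha256": sha256_of_file(evidence_file),
        "source_url": source_url,
        # Timezone-aware UTC timestamp, e.g. "2024-01-01T12:00:00+00:00".
        "recorded_at_utc": datetime.now(timezone.utc).isoformat(),
        "note": note,
    }
    # Append mode keeps earlier records intact; one JSON object per line.
    with log_path.open("a", encoding="utf-8") as log:
        log.write(json.dumps(record) + "\n")
    return record
```

Because the digest changes if even one byte of a file changes, re-hashing a saved copy later and comparing it with the logged value shows whether the copy has been altered since it was recorded.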

## The Future of Generative AI and Ethical Safeguards

Generative AI’s growth offers huge upsides, from medical breakthroughs to creative tools, but “undress AI” shows the dangers of its flip side. Strong ethical standards and rules are needed to guide it, such as required watermarks on AI outputs, provenance records that trace where media came from, and tougher obligations for developers. International efforts aim to balance progress with protection, so that AI builds up rather than tears down.

Platforms like Swipey AI show a better path by building in privacy, consent, and clear accountability from the start. This kind of thoughtful design could inspire the field and spark conversations among regulators, tech firms, and users about keeping innovation humane and safe.

## Conclusion: Prioritizing Digital Safety and Empathy

“Undress AI” poses a stark threat to privacy and security in our connected society, with harms that scar minds, careers, and relationships. We must rally around consent as the bedrock of digital life through awareness campaigns, education for all ages, and strong laws and technical safeguards that stop abusers and support victims. Cultivating kindness online, championing ethical AI, and pressing platforms to act can pave the way for a web where respect and safety come first.

## Frequently Asked Questions

### What exactly is “undress AI” technology?

“Undress AI” technology refers to artificial intelligence systems, often leveraging generative adversarial networks (GANs) or diffusion models, that can manipulate existing images of individuals to make them appear nude or in sexually explicit situations without their consent. It creates realistic deepfake imagery that is entirely fabricated.

### Is it illegal to create or share AI-generated “undress” images in the USA or EU?

In many cases, yes, especially when the images are shared. In the USA and across the EU, sharing AI-generated “undress” images without consent can fall under laws on non-consensual intimate imagery (NCII), revenge porn, privacy violations, or harassment, and victims may also bring civil claims such as defamation. Whether merely creating such an image is illegal varies more widely. Penalties depend on the specific state or national law and can include significant fines and imprisonment.

### How can I tell if an image of someone has been manipulated by “undress AI”?

Look for subtle inconsistencies, such as:

- Unnatural blending or discrepancies in skin tone and texture.

- Unusual lighting or shadows that don’t match the environment.

- Distorted backgrounds or pixelation around the manipulated area.

- Unnatural features in eyes, teeth, or hair that look too perfect or oddly malformed.

Spotting manipulation can be difficult; emerging AI detection tools can help, although none is fully reliable.

### What should I do if I find an AI-generated non-consensual image of myself or someone I know online?

If you discover such an image:

- Preserve Evidence: Take screenshots, record URLs, and note dates/times. Do not delete the original image or conversation.

- Report: Report the content to the platform where it is hosted. Contact local law enforcement (e.g., FBI in the USA, local police in the EU/UK).

- Seek Support: Reach out to mental health professionals or victim support organizations.

- Legal Counsel: Consider consulting a lawyer specializing in digital rights or NCII.

### Are tech companies like Meta, Google, or Apple responsible for “undress AI” content?

Tech giants have established policies against non-consensual intimate imagery and harmful deepfakes. While they are not typically liable for user-generated content under certain legal frameworks (like Section 230 in the USA), they are increasingly expected to moderate content and remove harmful material swiftly. There’s an ongoing debate about their level of responsibility and the need for more proactive measures.

### How can parents protect their children from the risks of “undress AI” and deepfakes?

Parents can protect their children by:

- Fostering open communication about online dangers.

- Educating them about digital consent and the permanence of online content.

- Encouraging strong privacy settings on all devices and accounts.

- Teaching them to identify and report suspicious content or requests.

- Monitoring their online activities and reviewing privacy settings regularly.

### What are the psychological impacts of being a victim of “undress AI”?

The psychological impacts are severe and can include intense anxiety, depression, humiliation, shame, a sense of betrayal, loss of control, and post-traumatic stress disorder (PTSD). Victims often experience significant distress, social withdrawal, and long-term emotional trauma due to the profound violation of their privacy and identity.

### Are there any tools or services available to help remove “undress AI” images from the internet?

Yes, several services specialize in digital reputation management and online content removal. Victims can also issue legal notices (like DMCA takedowns) to website hosts or search engines. Organizations dedicated to fighting NCII often provide resources and guidance on image removal, and platforms themselves typically have reporting mechanisms for such content.

### What are the potential penalties for creating or distributing “undress AI” content?

Penalties vary significantly by jurisdiction. Depending on the law, perpetrators may face criminal charges, including felonies in some places, along with substantial fines and prison sentences. Victims may also bring civil lawsuits for defamation, emotional distress, or privacy violations, which can result in significant monetary damages.

### How is “undress AI” different from consensual digital art or photo editing?

The critical distinction is consent. Consensual digital art or photo editing involves people who willingly take part in the creation or alteration of their images. “Undress AI,” when used on real people without their permission or knowledge, creates and spreads non-consensual intimate imagery that violates their privacy and autonomy.
