Introduction: The Unsettling Reality of AI Deepfake Porn

The arrival of AI deepfake generators marks a disturbing shift in how digital content can be twisted and misused, especially through the spread of non-consensual intimate imagery, or NCII. Essentially, a deepfake uses advanced AI technology to swap one person’s face or likeness onto another in photos or videos, creating something that looks entirely real but is completely fabricated. Though this tech has positive uses in fields like film production or classroom simulations, its dark side in pornography poses serious ethical dilemmas and endangers personal privacy and security. In this piece, we’ll explore how AI deepfake porn works, the deep scars it leaves on those targeted, the shifting legal responses around the world, and what it will take from all of us to fight back against this growing menace.

What is an AI Deepfake Porn Generator?

AI deepfake porn generators are powerful software programs or web-based platforms that harness artificial intelligence to produce lifelike but fake images and videos of people in explicit sexual scenarios, all without their permission. These tools have grown so user-friendly that they’re now available to just about anyone with an internet connection, sparking heated debates about digital morality and the limits of innovation.

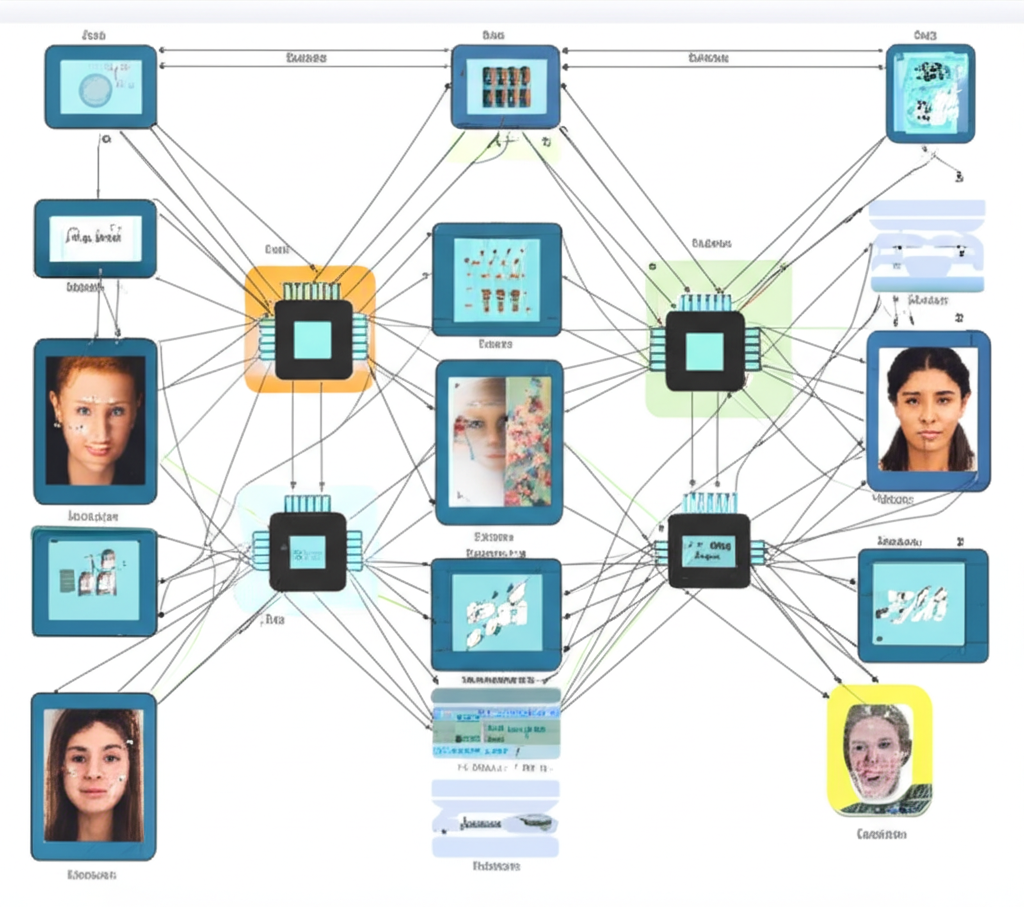

Understanding Deepfake Technology

Deepfakes rely on deep learning, a branch of AI that trains neural networks on massive amounts of data to spot and replicate patterns. One key method is the generative adversarial network, or GAN, where two neural networks go head-to-head: the generator crafts phony content, like pasting a face onto a body, and the discriminator scrutinizes it to tell real from fake. Over time, this back-and-forth sharpens the generator’s skills, resulting in synthetic media that’s eerily convincing. Such capabilities let creators tweak existing images or videos effortlessly—swapping faces, reshaping figures, or even dubbing voices—to build deceptive scenes from scratch. For context, early deepfakes gained notoriety in 2017 when they targeted celebrities like Gal Gadot in viral clips, showing just how quickly this tech can blur the line between reality and fabrication.
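The generator-versus-discriminator contest described above is commonly written as a single minimax objective. The version below is the standard textbook formulation of a GAN, not the training recipe of any particular tool, and the symbols ($G$, $D$, $p_{\text{data}}$, $p_z$) are conventional notation rather than anything defined in this article:

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

Here $D(x)$ is the discriminator’s estimated probability that a sample $x$ is real, and $G(z)$ is the generator’s output for a random input $z$. The discriminator tries to make the expression large by scoring real samples high and generated ones low, while the generator tries to make it small by producing outputs the discriminator mistakes for real.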

The Generator Aspect: How AI Tools Create Fake Content

At the core of these AI deepfake generators is the training phase, where models absorb details from a person’s publicly available photos or videos. After that preparation, the AI can overlay the person’s features onto pornographic material that’s already out there or invent fresh explicit scenes altogether. Sure, some apps started out for fun, like swapping faces in silly memes, but the same mechanics lend themselves to abuse with terrifying ease. What makes this so widespread is how little know-how you need these days—free online tools mean anyone can whip up damaging fakes in minutes, turning casual snapshots into tools for harassment. Real-world cases, such as the 2023 scandals involving high school students creating deepfakes of classmates, illustrate how this accessibility fuels a surge in everyday violations.

The Profound Ethical and Societal Impact

AI deepfake porn doesn’t just hurt individuals; it shakes the foundations of trust in our online world and questions what we can believe in an era of endless digital noise. The moral stakes are high, centering on issues of permission, personal boundaries, and emotional health.

The Scourge of Non-Consensual Intimate Imagery (NCII)

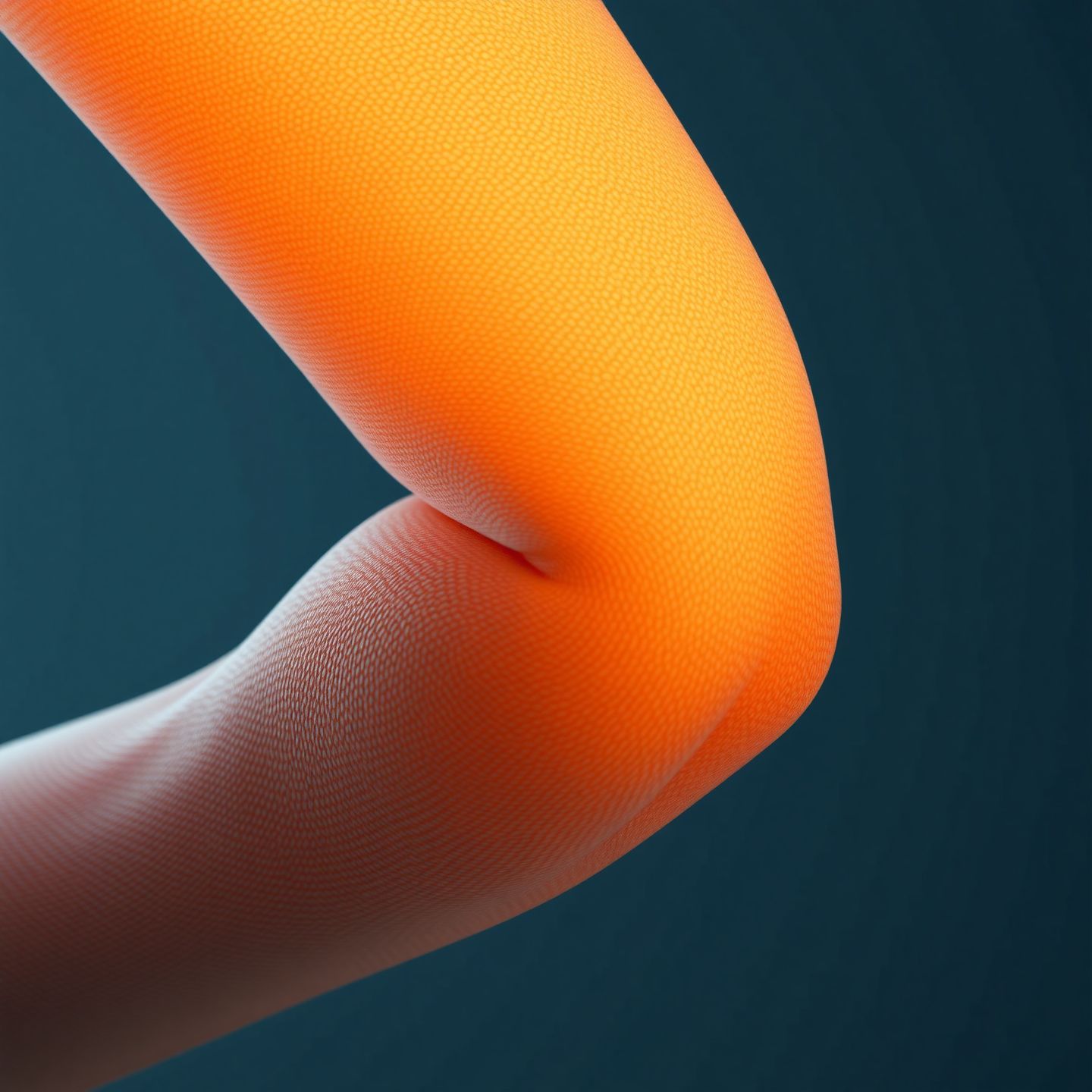

By definition, deepfake porn falls squarely into non-consensual intimate imagery, or NCII, because it hijacks someone’s appearance without their okay. This isn’t just a breach of privacy—it’s a direct attack on a person’s right to control how they’re seen and represented online. Compared to revenge porn, which leaks real private moments, deepfakes invent those moments from nothing, layering on extra deceit and emotional cruelty. It’s akin to a virtual assault, robbing targets of their sense of self in the digital realm and leaving them feeling exposed and powerless.

Devastating Consequences for Victims

Those targeted by AI deepfake porn can face lasting repercussions in every part of their lives. The mental toll is brutal: many suffer intense anxiety, depression, or even thoughts of self-harm as they grapple with shame and fear. Careers can crumble under reputational damage, relationships fracture, and social lives grind to a halt. Because content on the web is rarely erased for good, these fakes can resurface repeatedly, keeping victims in a state of perpetual dread. Studies from groups like the Pew Research Center reveal how these attacks exacerbate mental health crises, with survivors often needing therapy or support networks to rebuild. High-profile examples, like the explicit deepfake images of Taylor Swift that went viral in early 2024, show that even celebrities aren’t immune, amplifying the issue’s reach and urgency.

Eroding Trust and the Challenge to Truth

The fallout from deepfakes reaches far beyond personal stories, weakening our faith in media and conversations online. As these fakes get harder to spot, distinguishing fact from fiction becomes a daily struggle, paving the way for misinformation that sows division. This affects everything from news reporting—think how outlets like BBC News must now verify visuals rigorously—to elections, where manipulated clips could sway voters. When images of anyone can be faked to push lies, society grows more cynical, risking fractures in how we connect and govern ourselves. The result? A landscape where doubt reigns, making it easier for bad actors to exploit chaos.

The Legal Landscape: AI Deepfake Porn and the Law

With AI deepfake tools evolving rapidly, lawmakers across the globe are racing to catch up, crafting rules that balance free expression with protection. The terrain is uneven, shaped by local priorities and enforcement capabilities.

Existing Legislation in the United States

For years the United States had no nationwide law aimed squarely at deepfake porn, leaving a patchwork of federal and state measures to fill the gap. Numerous states have broadened their revenge porn statutes to cover synthetic creations. California, Virginia, and Texas are among the states with targeted measures against non-consensual deepfake porn, with penalties for creators and sharers that can include fines and jail time. At the federal level, the Take It Down Act, signed in May 2025, makes it a crime to knowingly publish non-consensual intimate imagery, including AI-generated depictions, and requires covered platforms to remove reported material within 48 hours. Agencies such as the FBI, through its Internet Crime Complaint Center, are also stepping up their handling of these cases, though pinning down anonymous offenders across state lines remains tricky. Prosecutors have shown a willingness to adapt the tools at hand as well: in 2024, for instance, federal prosecutors used existing wire fraud laws to pursue a deepfake ring.

European Approaches to Deepfake Regulation

Europe has taken a forward-thinking stance on digital protections, with the General Data Protection Regulation, or GDPR, offering strong safeguards for personal data, including images of identifiable people, that apply to deepfake misuse. Individual countries are layering on specifics: Germany’s rules on image rights can be used against deepfake creators, while the UK draws on harassment and online safety laws. The European Union’s AI Act, which entered into force in 2024, places transparency obligations on deepfakes, requiring that AI-generated or manipulated content be disclosed as such. This could put pressure on the tools themselves, pushing developers to build in checks against abuse. Overall, the EU’s unified approach contrasts with the state-by-state variation in the US, potentially setting a global benchmark.

Enforcement Challenges and Future Prospects

Even as laws multiply, putting them into practice is no small feat. The web’s anonymity, combined with crimes that span countries, makes tracking and charging suspects a nightmare. International teamwork is essential, yet slow—think joint operations between Europol and the FBI. Looking ahead, success depends on flexible laws that evolve with tech, deeper global partnerships, and courts better versed in digital forensics. Initiatives like the US’s 2024 national strategy on AI safety hint at progress, but sustained investment in cyber expertise will be key to turning words into real deterrence.

Combating Misuse: Detection, Prevention, and Support

To tackle AI deepfake porn head-on, we need layered strategies: cutting-edge tech for spotting fakes, proactive steps to stop them before they spread, and robust help for those affected.

Identifying Deepfake Content

Deepfake creators keep pushing boundaries, but so do the experts building countermeasures. Clues like awkward blinks, mismatched lighting, or fuzzy edges around faces can tip you off. AI-driven detectors scan video and audio for these red flags, frame by frame. Tools from companies like Microsoft are getting sharper, though the arms race with creation methods means none is infallible. In practice, fact-checkers at places like Reuters pair these tools with human judgment, as they did when debunking election-related fakes during recent campaigns.
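To make the frame-by-frame idea concrete, here is a minimal sketch of how per-frame scores might be combined into flagged segments for a human reviewer. It assumes some detector has already assigned each frame a score between 0 and 1 (higher meaning more likely manipulated). The detector itself, the function name `flag_suspicious_segments`, the window size, and the threshold are all hypothetical choices for illustration, not the method of any named tool.

```python
from statistics import mean
from typing import List, Tuple


def flag_suspicious_segments(
    frame_scores: List[float],
    window: int = 5,
    threshold: float = 0.7,
) -> List[Tuple[int, int]]:
    """Return inclusive (start, end) frame ranges whose windowed mean score
    reaches the threshold. Overlapping or adjacent windows are merged."""
    flagged: List[Tuple[int, int]] = []
    for start in range(0, len(frame_scores) - window + 1):
        end = start + window - 1
        # Averaging over a window smooths out single-frame noise.
        if mean(frame_scores[start:end + 1]) >= threshold:
            if flagged and start <= flagged[-1][1] + 1:
                # Extend the previous segment instead of starting a new one.
                flagged[-1] = (flagged[-1][0], end)
            else:
                flagged.append((start, end))
    return flagged


# Hypothetical scores: a burst of high-scoring frames in the middle of a clip.
scores = [0.1] * 5 + [0.9] * 5 + [0.1] * 5
print(flag_suspicious_segments(scores))
```

Averaging over a window rather than reacting to single frames is one simple way to reduce false alarms from noisy scores. A flagged segment is a prompt for human review, not a verdict.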

Reporting and Legal Recourse for Victims

If you’re a victim in the US or EU, knowing your options is empowering. Start by gathering evidence thoroughly.

- Immediate Action: Capture screenshots, note down links, and save any related messages.

- Platform Reporting: Flag the material on the site where it appears, such as X (formerly Twitter) or Reddit. Big tech firms have clear rules against NCII and act on reports.

- Law Enforcement: Lodge a report with local authorities; in the US, loop in the FBI’s Internet Crime Complaint Center too. EU residents should contact their national police.

- Specialized Support Organizations: Turn to groups like the Revenge Porn Helpline in the UK or, when the person depicted is a minor, the National Center for Missing and Exploited Children in the US, which treats sexual deepfakes of minors as abuse material.

- Legal Aid: Speak with attorneys focused on online harms for pursuits like privacy suits or defamation claims.

These steps, taken promptly, can limit damage and hold wrongdoers accountable.
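For the evidence-gathering step, one way to keep records organized is a simple log that stores each link, the time it was captured, and a fingerprint of the saved screenshot. The sketch below is illustrative only: the `EvidenceEntry` fields, the function names, and the JSON file layout are assumptions made for this example, not a format required by any platform or police force. A SHA-256 hash is used so that anyone can later check that a screenshot file has not been altered since it was logged.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from pathlib import Path


@dataclass
class EvidenceEntry:
    url: str          # where the content was found
    captured_at: str  # UTC timestamp in ISO 8601 format
    screenshot: str   # path to the saved screenshot file
    sha256: str       # fingerprint of the screenshot's bytes
    note: str         # free-text context, e.g. "reported to platform"


def sha256_of_file(path: Path) -> str:
    """Hash a file in chunks so large captures don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def record_evidence(log_path: Path, url: str, screenshot: Path, note: str = "") -> EvidenceEntry:
    """Append one entry to a JSON log, creating the log if it doesn't exist."""
    entry = EvidenceEntry(
        url=url,
        captured_at=datetime.now(timezone.utc).isoformat(),
        screenshot=str(screenshot),
        sha256=sha256_of_file(screenshot),
        note=note,
    )
    entries = json.loads(log_path.read_text(encoding="utf-8")) if log_path.exists() else []
    entries.append(asdict(entry))
    log_path.write_text(json.dumps(entries, indent=2), encoding="utf-8")
    return entry
```

Calling `record_evidence(Path("evidence_log.json"), url, Path("capture.png"), "reported to platform")` after each capture builds a dated record that can be handed to a platform, the police, or an attorney. Keep the original screenshot files alongside the log, since the hashes are only useful if the files they describe are preserved.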

The Role of Platform Accountability and Ethical AI Development

Tech giants like Meta and Google must lead by example, enforcing tough moderation that stops deepfakes from spreading on their services. That means automated scanning, quick takedowns, and bans for violators. On the development side, firms behind tools like Stable Diffusion need to embed ethics from the start, for example by watermarking generated content or blocking explicit outputs. Collaborations with watchdogs and governments, as in the Partnership on AI’s guidelines, are pushing for responsible innovation. Ultimately, this shared effort among creators, platforms, and regulators can shield users from harm while nurturing AI’s benefits.

Conclusion: Towards a Safer Digital Future

AI deepfake porn generators stand as one of today’s toughest tests for ethics and justice in tech. The profound suffering they inflict on victims, the doubt they cast over digital truth, and their simple creation process call for urgent, unified responses. Building a secure online space means crafting adaptable legal tools, advancing detection innovations, and raising public vigilance about these dangers. It all comes down to shared duty: from AI builders and tech firms to leaders and everyday people, we must prioritize consent, safeguard privacy, and shield the vulnerable from this insidious digital threat.

Is creating or sharing AI deepfake porn illegal in the US or Europe?

Yes. In many places across the US and Europe, making or distributing AI deepfake porn is against the law when it shows someone in sexual situations without their consent. Regulations differ by US state (California, Virginia, and Texas, for example, have dedicated deepfake rules) and by European nation. These often tie into broader statutes on non-consensual intimate imagery, harassment, or defamation, with new deepfake-specific laws being adopted quickly.

What are the penalties for creating or distributing non-consensual deepfake porn?

Consequences depend on the location but generally involve:

- Fines: From several thousand to tens of thousands in dollars or euros.

- Imprisonment: Time behind bars, particularly for cases depicting minors or for repeat violations.

- Civil Lawsuits: Targets can sue for compensation over emotional pain and damaged reputation.

- Registration: In some jurisdictions, a conviction can lead to sex offender registration.

How can victims of AI deepfake porn get help or report the content?

Victims should:

- Document Everything: Gather screenshots, web addresses, and pertinent messages.

- Report to Platforms: Notify the hosting site or social network right away to push for removal.

- Contact Law Enforcement: Submit a report to police. US options include local departments and the FBI’s Internet Crime Complaint Center (IC3), while Europeans should contact their national police.

- Seek Support: Connect with support resources like the Office for Victims of Crime in the US or specialized European services for online harms.

- Consult Legal Counsel: Get advice from experts in cyber law or digital protections to weigh your options.

Are there any legitimate or ethical uses for AI deepfake technology?

Yes, with proper consent and good intentions, deepfake tech serves valuable purposes:

- Entertainment: Special effects in films, bringing back actors who’ve passed away, or fun celebrity parodies.

- Education: Recreating historical events or tailoring lessons to students.

- Accessibility: Dubbing videos into other languages while syncing the speaker’s expressions.

- Archival Restoration: Reviving and sharpening vintage films or photographs.

The crucial factor is always getting permission and aiming for positive outcomes.

Can AI deepfake porn be detected, and how accurate are detection tools?

Deepfake porn can often be detected, though it’s a persistent battle. The most advanced fakes can fool the eye, but flaws often linger, such as:

- Odd blinking or eye shifts.

- Mismatches in light, shadows, or complexion.

- Blurring at the swap’s borders.

- Stiff or emotionless faces.

AI-based detectors are improving, but their success rates fluctuate in this ongoing duel with creation methods. Nothing guarantees perfect results yet.

What is the difference between deepfake porn and revenge porn?

The main distinction is in the content’s origin:

- Deepfake Porn: Features made-up sexual depictions crafted by AI, showing someone in acts they never did—purely artificial.

- Revenge Porn: Shares genuine private photos or videos, which may originally have been taken or shared consensually, without the subject’s permission in order to humiliate or intimidate.

Both qualify as non-consensual intimate imagery and inflict deep trauma.

How do major tech platforms (e.g., Google, Meta) handle deepfake porn content?

Leading platforms enforce firm bans on non-consensual intimate imagery, covering deepfake porn. They usually:

- Prohibit Upload: Block such material from being posted.

- Remove Content: Pull down flagged deepfakes promptly.

- Ban Users: Suspend accounts for serious or repeated breaches.

- Invest in Detection: Deploy AI and teams of reviewers to catch violations early.

- Collaborate with Law Enforcement: Partner with officials on probes.

Still, the flood of uploads and clever fakes make total control elusive.

What is being done to regulate AI deepfake generators internationally?

Global pushes to control AI deepfake generators are gaining steam:

- National Laws: Countries worldwide are passing or refining bans on non-consensual deepfakes.

- AI Regulations: The EU’s AI Act requires AI-generated or manipulated content to be disclosed as such, which could put pressure on deepfake tools.

- Industry Guidelines: Developers and firms are adopting codes of conduct with built-in protections.

- International Cooperation: Police forces team up to handle these borderless offenses.

- Research & Development: Funds flow into better detection and tracking tech.

The aim: Encourage progress while curbing dangers.

Can I be a victim of deepfake porn even if I don’t have public online images?

Public photos make targeting simpler, but you could still face risks without them. Wrongdoers might draw from:

- Private Images: Hacked files, shared secrets, or sneaky shots.

- Limited Public Presence: A handful of identifiable pics, say from work profiles or friends’ posts, suffice for training.

- Synthesized Likeness: Cutting-edge AI could approximate from scant data, though realistic results demand more input.

Having no online footprint lowers the odds but doesn’t eliminate them.

What advice is there for parents concerned about deepfake technology and their children?

Parents can protect kids by:

- Educating: Discuss deepfakes, safe surfing, and questioning online media.

- Privacy Settings: Lock down accounts on social sites and apps.

- Monitor Online Activity: Keep an eye on what kids post and view while respecting their privacy.

- Report & Block: Show how to flag and avoid bad content or people.

- Seek Support: Know resources like the National Center for Missing and Exploited Children in the US for incidents.

- Foster Open Communication: Build trust so kids share online worries freely.
